“Quality is never an accident. It is always the result of intelligent effort.” John Ruskin

Which LQA process is right for you?

Before jumping into streamlining your localization QA project, let’s think about its scope and testing process for a minute.

Even though the focus of globalization managers is usually on linguistic testing and translation quality review, localization QA can also involve UI/UX evaluation and functional or compliance testing. Naturally, the more the project can cover the better, but there’s a cost.


The cost depends heavily on the localization testing process you implement. For example, checking text in a spreadsheet takes far fewer resources than running full functional testing of the latest build of a just-released game title.

Likewise, the product under assessment has a big influence on how the QA testing process is executed. Compare sending testers a simple link to get started with asking them to free up 16GB of disk space and sit through a 20-minute installation.

Localization QA testing using screenshots

If you want the best of both worlds, linguistic and visual testing, while still working fast and on a reasonable budget, then combining texts with screenshots (or, if need be, sounds or videos) is definitely a path to consider.

This is particularly true for application, mobile, and game testing. It’s fast to check a text against its related screenshot and move on to the next one, and screenshots also cover situations that take time to reproduce in live testing.

>> Discover how Rovio accelerated LQA 4x by using screenshots

#1: Unified environment for LQA testing

Key factors to consider when putting together a setup for a streamlined LQA project are how parts of your LQA process are unified, how LQA is connected with the product you assess, and how you can collaborate with your stakeholders.

Unified testing, logging, and processing

For an LQA team, an interface where testing occurs is needed as well as a place where results and issues are logged and processed. When these two interfaces are separate, then testers will need to switch back and forth. The testing process will take longer and there’ll be potential for drop-outs or miscommunication.

Unifying testing and issue processing in one environment would mean testers can log everything instantaneously without the need to switch. Therefore, if your product or technology allows it, strive for testing and issue logging in one interface.

LQA unified with translation and content management

Even more powerful is embedding LQA into your overall localization process and content pipeline as much as possible. After all, localization QA provides feedback on translation and content production.

As the last stage of a localization process, LQA triggers a series of updates. The deeper you connect your QA testing with translation and content management, the better for all.

Having your “pipe” unified will help you better manage updates during the process and flexibly prepare batches to work on. Plus, communication with translators, content editors, or developers will be a lot easier since you won’t have to send data or content back and forth to sync different systems and teams.

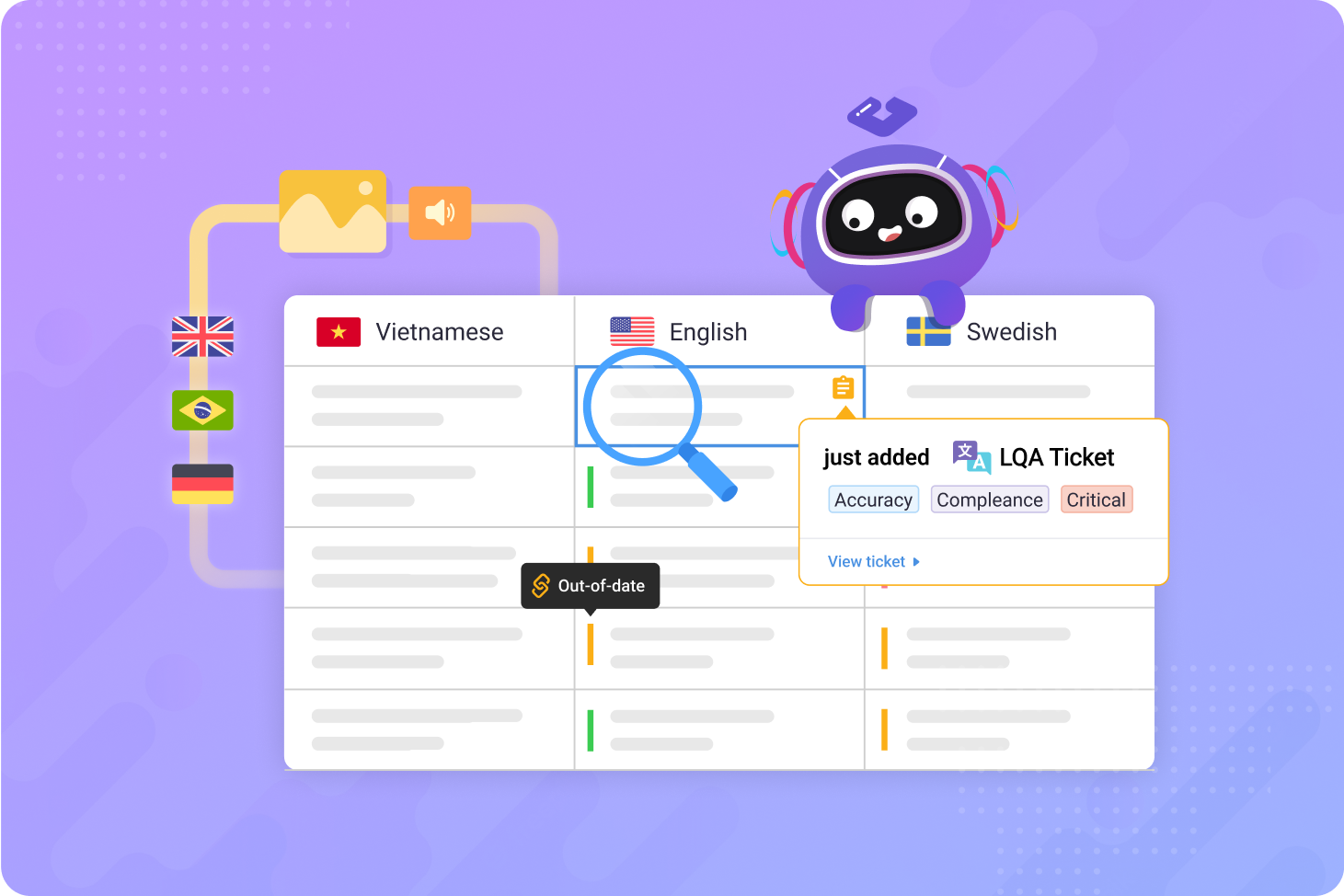

Unifying across languages

Although you might prefer to divide responsibilities in your LQA team on a language-pair basis, access to all languages and related content is where the streamlining occurs.

An interface with all language versions in a single view makes the overall management of the project much easier. Rather than managing LQA on a language-pair level, you want to manage one language “grid” representing your overall project.

Tackling cross-language issues

Working on a grid basis, as opposed to a language-pair basis, will enable your LQA team to identify issues impacting multiple languages. Testers can cross-check between languages and if anyone spots an issue, they can quickly verify how many languages are affected. As a result, you can avoid duplicated reports of the same issue.
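
To make the idea concrete, here is a minimal sketch of collapsing per-language reports into one cross-language issue. The record IDs, language codes, and category names are made up for illustration, and this is not how any particular tool implements it.

```python
from collections import defaultdict

# Hypothetical issue reports: (record_id, language, issue_category)
reports = [
    ("menu_start", "de", "truncation"),
    ("menu_start", "fr", "truncation"),
    ("menu_start", "de", "truncation"),  # duplicate report from a second tester
    ("shop_title", "ja", "mistranslation"),
]


def group_cross_language(reports):
    """Collapse reports into one entry per (record, category) with the affected languages."""
    grouped = defaultdict(set)
    for record_id, language, category in reports:
        grouped[(record_id, category)].add(language)
    return {key: sorted(languages) for key, languages in grouped.items()}


issues = group_cross_language(reports)
for (record_id, category), languages in issues.items():
    print(f"{record_id}: {category} affects {', '.join(languages)}")
```

Keying on the record and category rather than on the language pair is what removes the duplicate report and shows at a glance how many languages share the problem.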

#2: Running a continuous process

So you have your pipeline unified. How can you make LQA even better? By optimizing the internal process. You want to complete the testing pass in a shorter time span, yet with great quality. Even though these two KPIs pull in opposite directions, there’s one key that can help you with both of them: a continuous LQA process.

Continuous LQA doesn’t wait for development and translation to be done. It starts as soon as they produce some results, then keeps running on the increments added to your pipeline as development and translation of your product progress, until everything is done.

You can ship sooner by working continuously. However, there are two conditions to meet: clearly define what’s ready for LQA, and filter that content out within your pipeline.

Once your content status is updated as ready for LQA, then adding the objects to your current LQA queue should be as automated as possible. If getting the content together takes too much time, then you have a blocker to overcome when optimizing your flow to run continuously.
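
As a rough sketch of what that automation could look like, the snippet below filters records by status and appends them to the LQA queue. The `Record` type and the status values are assumptions for illustration. Your pipeline will have its own.

```python
from dataclasses import dataclass


@dataclass
class Record:
    record_id: str
    status: str  # hypothetical values: "in_translation", "ready_for_lqa", "lqa_done"


def enqueue_ready(records, queue):
    """Append records marked ready for LQA that are not already in the queue."""
    queued_ids = {record.record_id for record in queue}
    for record in records:
        if record.status == "ready_for_lqa" and record.record_id not in queued_ids:
            queue.append(record)
            queued_ids.add(record.record_id)
    return queue


pipeline = [
    Record("menu_start", "ready_for_lqa"),
    Record("shop_title", "in_translation"),
    Record("hud_score", "ready_for_lqa"),
]
lqa_queue = enqueue_ready(pipeline, [])
print([record.record_id for record in lqa_queue])
```

Because already queued records are skipped, the function can run on every pipeline update without creating duplicates.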


#3: Clear guidance

Having clear assessment criteria to follow consistently during testing is super important. Otherwise, the results of your LQA efforts might be inconclusive. Here’s where implementing an LQA model will help.

LQA model selection

LQA models enable you to structure the feedback given by testers, evaluate localization quality, and categorize localization issues. Several standardized models are commonly used by LQA teams, such as the LISA QA Model, TAUS DQF-MQM, or SAE J2450, that you can look up and employ.

When thinking about which LQA model you should use, you should consider your environment and the actions you’ll need to go through to make the model work. A complex model lacking support in your tooling would have a negative impact on your project.
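
To show how little tooling a simple model needs, here is a sketch of a severity-weighted penalty score in the spirit of MQM-style models. The severity weights, category names, and pass threshold are assumptions chosen for the example, not values prescribed by any of the standards above.

```python
# Assumed severity weights; real models and teams define their own.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 25}


def penalty_per_thousand_words(issues, word_count):
    """Sum the severity weights of all issues and normalize to 1,000 words."""
    total = sum(SEVERITY_WEIGHTS[severity] for _category, severity in issues)
    return total * 1000 / word_count


# Hypothetical issues logged by a tester: (category, severity)
logged = [("terminology", "minor"), ("accuracy", "major"), ("fluency", "minor")]

score = penalty_per_thousand_words(logged, word_count=2000)
passed = score <= 5.0  # assumed pass threshold
print(score, passed)
```

If your tooling can store a category and a severity per issue, it can support a model like this. A model that needs more fields than your environment offers is the kind that ends up slowing the project down.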

Proper user management

Besides the LQA settings, clear guidelines for working with content will help you a ton with running the whole project smoothly. You want to avoid “accidents”, unintentional mistakes in updating data, such as changing record IDs, corrupting formulas, using old versions, forgetting to copy some parts, revealing parts to the public before launch, and on and on.

Here’s where traditional tools widely used for managing content for translation, such as Google Sheets or Excel, fail. Their access control usually has two states: all or nothing. Integrating these tools into your flow with proper access rights to manage what users can see, edit, add, or delete is far from straightforward.

Access control with Gridly

Gridly can help you manage access to your project by creating Views within your spreadsheet. Within Views, you can manage which language or content columns are displayed and whether they’re editable or not. You then share Views with Groups of users or even other apps via API.

Users have assigned roles that specify functions they can perform. For example, allowing editing of a column, exporting data, creating other Views, branches, etc. Plus, thanks to automated tracking of changes, a complete history of actions is available instantly on a cell level.

Proper issue management

Besides managing access control to content, an important layer of guidance, you also need to manage localization issues found by your testers. Your environment needs to be capable of managing the statuses of the LQA tickets and passing them over to other teams to fix them.

Some issues are solved instantly, others remain open for a long time and need a deep dive into what’s preventing them from being resolved. Having a ticketing system managing the issue statuses will help you focus on what still needs to be done to declare your project localization issue-free.
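
A ticketing flow like this boils down to a small set of statuses and allowed transitions. The sketch below uses assumed status names and a plain dictionary per ticket. It is an illustration of the idea, not the workflow of a specific tool.

```python
# Assumed statuses and the transitions allowed between them.
ALLOWED_TRANSITIONS = {
    "open": {"in_progress", "rejected"},
    "in_progress": {"fixed"},
    "fixed": {"verified", "open"},  # reopen when the fix fails re-testing
    "verified": set(),
    "rejected": set(),
}


def move(ticket, new_status):
    """Change a ticket's status, refusing transitions the workflow does not allow."""
    if new_status not in ALLOWED_TRANSITIONS[ticket["status"]]:
        raise ValueError(f"Cannot move {ticket['id']} from {ticket['status']} to {new_status}")
    ticket["status"] = new_status
    return ticket


def unresolved(tickets):
    """Return the tickets that still block an issue-free release."""
    return [t for t in tickets if t["status"] not in {"verified", "rejected"}]


tickets = [{"id": "LQA-1", "status": "open"}, {"id": "LQA-2", "status": "fixed"}]
move(tickets[0], "in_progress")
move(tickets[1], "verified")
print([t["id"] for t in unresolved(tickets)])
```

The `unresolved` list is the one to watch: when it is empty, the project can be declared free of known localization issues.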

#4: Getting content for LQA together at speed

LQA testing of web and mobile applications, games, services, and other types of digital products commonly involves thousands of lines of text, plus matching audio files, videos, or the screenshots recommended earlier. All of that for a multitude of languages.

Usually, this data is stored in a folder structure with filenames and metadata depicting what they’re referencing. The time spent by the team sifting through these files, trying to find the one that is needed is something that knocks down the efficiency of your process.

At the beginning of your project, the assets you need for testing might be scattered around in different silos and might exist in various versions. Therefore, a single place that is easily accessible, usable, and gives you a neat structure in which you can store all your content will kick the project off on a successful trajectory.

The benefits of LQA that combines texts and screenshots, linguistic and visual LQA together, were already discussed, but how can you get all this together in reality? Doesn’t gathering the texts and related screenshots take more work than it saves?

Gathering texts and screenshots for LQA with Gridly

Importing string data to Gridly is pretty straightforward. It has support for a plethora of file formats, including JSON or PO files, and an easy importing interface. Besides manual import, you can use one of the out-of-the-box integrations, or connect through API.

Besides text, Gridly supports various file formats and can display them in the very same row with related text. You can have multiple of them in one cell if needed, preview them, or even play them if these are media files.

You can upload them one by one to cells but that wouldn’t be a way for devoted LQA streamliners. Folder upload enables you to upload multiple files at once from a folder and its subfolders to corresponding records in Gridly. You can simply choose a column to upload the files to and select a folder on your machine.

Gridly pairs the names of your files with records in a column of your choice. If you have subfolders in the folder you’re uploading, you can use Paths in Gridly to determine which records belong to which subfolder. Gridly even checks if the already uploaded files were changed since the last upload to save you some time and data traffic.
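
The pairing idea itself is simple, which is why a consistent file-naming convention pays off. The sketch below matches file names (without extension) against record IDs. It only illustrates the principle under assumed file paths and IDs, and says nothing about how Gridly implements the matching internally.

```python
from pathlib import PurePosixPath


def pair_files_with_records(file_paths, record_ids):
    """Match each file to the record whose ID equals the file name without its extension."""
    known = set(record_ids)
    matched, unmatched = {}, []
    for raw_path in file_paths:
        stem = PurePosixPath(raw_path).stem
        if stem in known:
            matched.setdefault(stem, []).append(raw_path)
        else:
            unmatched.append(raw_path)
    return matched, unmatched


# Hypothetical screenshot folder and record IDs
files = [
    "screens/menu/menu_start.png",
    "screens/shop/shop_title.png",
    "screens/shop/old_banner.png",
]
matched, unmatched = pair_files_with_records(files, ["menu_start", "shop_title", "hud_score"])
print(matched)
print(unmatched)
```

Files that land in `unmatched` are worth reviewing before upload, since they usually point to a renamed string or a stale asset.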

How about an automated process? You can streamline the process by creating Lambda functions to automate file uploads from the cloud. Whenever a file is added, for example to Google Drive, and the filename is a match, it can go to the corresponding record in your Grid in Gridly.

Besides Lambda functions, the other common way to automate the process is connecting a back-end solution to Gridly’s API endpoint to push files into your Grid programmatically.

#5: Streamlined issue logging

Your next key to becoming an ace LQA streamliner is pretty straightforward: the ability to log an issue as fast as possible, yet with compliance to your LQA model.

You don’t need to be a mathematician to realize that the larger the scale of your content and the higher the number of logged issues, the more a clunky interface for logging those issues hurts efficiency.

When a tool has a great UI enabling instant creation of issues and supports processing of these issues, then you’re halfway there.

#6: All we need is (❤) collaboration

Cliché? In the era of an intensely digitized world, not one bit. These days collaboration isn’t important just to support communication between people, but also between their toolsets and the types of assets they operate with.

One typical example could be a developer who exports JSON files, while the translation team can only import CSVs. Or, translators can work only with text but your audio team also wants to assess sound recordings, and on and on. Collaboration is the holy grail for running a successful LQA project.

Support for multiple content types within your LQA environment will help you greatly improve collaboration. From a user’s perspective, import and export need to be easy, yet you need to support the multitude of commonly used formats.
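
The JSON-versus-CSV mismatch mentioned above is a good example of a gap that a few lines of code can bridge. This sketch assumes a JSON export shaped as record ID to per-language strings, which is only one of many possible layouts.

```python
import csv
import io
import json


def json_to_csv(json_text, languages):
    """Turn {"record_id": {"lang": "text"}} JSON into CSV with one column per language."""
    data = json.loads(json_text)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["record_id", *languages])
    for record_id, translations in data.items():
        # Missing translations become empty cells so translators can spot the gaps.
        writer.writerow([record_id, *(translations.get(lang, "") for lang in languages)])
    return buffer.getvalue()


# Hypothetical developer export
exported = '{"menu_start": {"en": "Start", "de": "Starten"}, "shop_title": {"en": "Shop"}}'
csv_text = json_to_csv(exported, ["en", "de"])
print(csv_text)
```

A tool that handles both formats natively makes this glue code unnecessary, which is the point: every conversion step you can drop is one less place for content to go out of sync.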

Another layer of collaboration takes place between systems. You can have the best tool for LQA ever, yet when it stands isolated, it will suffer. Long gone are times when integration would take a couple of days for a development team. The new standard, embracing webhooks and API connections, is a couple of clicks.

#7: Tracking and reporting

There’s one more thing to consider: it would be a sad day for your LQA endeavors if you couldn’t assess your outstanding results. Therefore, your project must-haves should include an analysis of your quality assessment project and reporting of results.

Needless to say, having a history of data updates throughout your pipeline can be of tremendous help. That could help you deep-dive when tackling unresolved issues and reveal many possible root causes. To get the right data, you need to be tracking and logging as much as possible.

Here’s where traditional spreadsheet tools fail because they don’t provide you with information about who changed which cell and when and how. Although this is the last key of your streamlining effort list, checking the tracking capabilities of your environment had better be one of your first steps.

Granular data is nice but since the end goal is to showcase your results, ideally with a shiny dashboard presented on loads of screens, it’s good to check all the data is accessible in a friendly way for further processing and can be easily exported to your favorite reporting tool.
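
As a small illustration of the step between granular data and a dashboard, the sketch below aggregates a list of logged issues into the kind of summary a reporting tool would consume. The field names and status values are assumptions for the example.

```python
from collections import Counter

# Hypothetical issue log entries; field names are illustrative.
issue_log = [
    {"language": "de", "category": "truncation", "status": "verified"},
    {"language": "de", "category": "terminology", "status": "open"},
    {"language": "fr", "category": "truncation", "status": "open"},
    {"language": "ja", "category": "accuracy", "status": "verified"},
]


def summarize(log):
    """Count issues per language and per category, plus those still unresolved."""
    return {
        "per_language": dict(Counter(entry["language"] for entry in log)),
        "per_category": dict(Counter(entry["category"] for entry in log)),
        "unresolved": sum(1 for entry in log if entry["status"] != "verified"),
    }


report = summarize(issue_log)
print(report)
```

A summary like this is only possible when every issue is logged with consistent fields, which is another reason to check tracking capabilities early.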