What is automated localization QA?

Automated localization quality assurance uses software tools to perform parts of the localization testing process. It frees your testing team to focus on more complex tasks while your tools handle the repetitive aspects of testing.

Should you automate the entire localization testing process?

Localization QA (LQA) is a broad practice that covers multiple disciplines and types of quality checks. While some can be automated, others require the attention and expertise of a trained linguist. Automating the entire testing process can result in an overall quality loss if you aren’t having your translated strings checked by professionals.

When should you use automated localization QA?

As with the choice between machine translation and human translators, automated LQA is best suited to simpler, more mundane testing that doesn’t rely on language or regional expertise. Functional issues, missing translations, and similar problems are ideal candidates for automation.

- Correct language display: Automated testing tools can change a user’s language preferences and verify that the correct language is displayed.

- Navigation: Navigation testing checks to make sure all buttons and links work properly in each language. Automated browser-based tools can navigate your localized website in seconds.

- Untranslated text: Specialized content management platforms built for localization will automatically detect whether a given text string has been translated or not.

- Screenshot collection: You’ll want human testers to verify that all your text strings display correctly, including whether they fit in their designated fields and appear in the right place. Automated tools can collect screenshots for your testers to review.

- Form verification: Automated tools can check whether forms on your website function correctly and deliver the submitted information to the right place.

- Smoke testing: Smoke testing is an initial testing phase that looks for severe errors that prevent your product from working properly.

- Payment processing: Functionality testing includes checking whether local payment methods are correctly implemented, and automation can help run these checks.
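To make the untranslated-text check concrete, here is a minimal Python sketch. It assumes your strings are exported as simple key-to-text dictionaries per locale (a hypothetical format; real platforms store content differently). It flags keys that are missing, empty, or still identical to the source text, with an allowlist for strings such as brand names that legitimately stay the same.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    key: str
    locale: str
    reason: str


def find_untranslated(
    source: dict[str, str],
    targets: dict[str, dict[str, str]],
    allow_identical: frozenset[str] = frozenset(),
) -> list[Issue]:
    """Flag keys that are missing, empty, or still identical to the source text."""
    issues: list[Issue] = []
    for locale, strings in targets.items():
        for key, src_text in source.items():
            tgt = strings.get(key)
            if tgt is None:
                issues.append(Issue(key, locale, "missing"))
            elif not tgt.strip():
                issues.append(Issue(key, locale, "empty"))
            elif tgt == src_text and key not in allow_identical:
                # Same text as the source usually means nobody translated it.
                issues.append(Issue(key, locale, "identical to source"))
    return issues
```

For example, with a German export that still says "Buy now" and a French export missing that key, the function reports one issue per locale while ignoring an allowlisted brand name.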

Which parts of the LQA process should be performed manually?

Only a trained language professional can evaluate translations for accuracy and consistency. And you’ll want to trust regional experts to make sure your localized content meets local regulations.

- Linguistic integrity: Machine translation isn’t yet on par with human professionals. Entrust your translation checks to a human.

- Brand tone and voice consistency: Regional branding experts can assess whether your localized content successfully channels your brand in your target markets.

- Text and font display issues: Use an automated tool to collect screenshots, then have human testers review them for display issues.

- Legal and regulatory compliance: Is your data protection appropriate for each region? How about your marketing claims? Only a local legal expert can verify this for sure.

- Cultural analysis: A software tool won’t know whether you’re breaking any regional taboos or using culturally meaningful images and expressions.

What are the benefits of automated localization QA?

Automated localization QA can significantly speed up your localization testing process while ensuring a higher overall degree of quality.

Efficiency

As deadlines loom, it can be tempting to rush or waive LQA in favor of a quicker launch. Automation can free your testers from the repetitive, time-consuming tasks that bog down the overall LQA process.

Without automated QA tools, game localization testers would have to play through an entire game to verify that every text string is displayed correctly. Automated screenshot collection changed that: rather than spending hours playing, testers can simply review screenshots of each string as it appears in the game.

The proof is in the numbers: Angry Birds developer Rovio enjoyed a fourfold increase in testing speed with a simple Unity script that automatically collected screenshots and imported them into Gridly. Testers no longer needed to play through the game themselves or view screenshots in a separate folder.

Learn more about Unity game localization QA best practices from Rovio

Fewer errors

Automated QA tools apply the same checks to every string, every time, so they reliably catch untranslated text, broken functionality, and incorrect code that a tired tester might miss. And without the drudgery of repetitive tasks dragging them down, your testing team will be less fatigued and more focused when it comes to the other aspects of LQA.

Better performance

An automated testing tool can run through your localized app or game in seconds, verifying every link, button, and form in a fraction of the time it’d take a human tester to do the same. The same goes for translation completion: when your localization management platform automatically detects new content, you’ll always know if your translators missed a string.
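One way to notice new content is to record a fingerprint of each source string at translation time and compare it later. The sketch below illustrates that idea under stated assumptions; it is not how any particular platform works. `translated_from` is a hypothetical mapping from string key to the SHA-256 hash of the source text the translator saw.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Stable hash of a source string's exact text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_stale(source: dict[str, str], translated_from: dict[str, str]) -> dict[str, list[str]]:
    """Split keys into never-translated ("new") and edited-since-translation ("changed")."""
    new = [key for key in source if key not in translated_from]
    changed = [
        key
        for key, text in source.items()
        if key in translated_from and translated_from[key] != fingerprint(text)
    ]
    return {"new": sorted(new), "changed": sorted(changed)}
```

Hashing the exact text means even a one-character source edit marks the string as changed, which errs on the side of sending it back to translators.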

Common challenges and solutions in automated localization QA

While automated LQA offers significant benefits, implementing it successfully requires navigating several common pitfalls. Understanding these challenges upfront can save your team months of frustration and false starts.

Challenge 1: False positive overload

The problem: Automated tools often flag legitimate localized content as errors, leading to alert fatigue and reduced trust in the system.

Common examples:

- Tools flagging culturally appropriate currency formats (€1.234,56 vs $1,234.56)

- Legitimate character expansion in languages like German being marked as UI overflow

- Date formats triggering “incorrect format” warnings in different locales

- Right-to-left languages causing layout “errors” in automated screenshot analysis

Solutions:

- Create locale-specific rule sets: Configure your tools with region-appropriate validation criteria. For example, allow higher character limits for German UI elements, since German text typically runs about 30% longer than English.

- Implement smart filtering: Use machine learning models trained on your content to reduce false positives over time.

- Establish baseline testing: Run initial tests on known-good localized content to calibrate your tools and establish acceptable parameters.

- Regular rule maintenance: Schedule quarterly reviews of your automated rules to remove outdated or overly sensitive checks.
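A locale-specific rule set can be as simple as a table of per-locale parameters. In this Python sketch, the `LOCALE_RULES` table, its 1.3 expansion factor for German (mirroring the roughly 30% figure above), and the number-format patterns are all illustrative assumptions to adapt. `calibrate_expansion` shows the baseline-testing idea by deriving a limit from translations you already know are good.

```python
import re

# Illustrative rules only: the expansion factors and patterns are assumptions to adapt.
LOCALE_RULES = {
    "en-US": {"max_expansion": 1.0, "number": re.compile(r"\d{1,3}(,\d{3})*(\.\d+)?")},
    "de-DE": {"max_expansion": 1.3, "number": re.compile(r"\d{1,3}(\.\d{3})*(,\d+)?")},
}


def fits_ui(target: str, source_limit: int, locale: str) -> bool:
    """Allow the target to exceed the English-based limit by the locale's expansion factor."""
    return len(target) <= int(source_limit * LOCALE_RULES[locale]["max_expansion"])


def valid_number(value: str, locale: str) -> bool:
    """Accept numbers written with the locale's own thousands and decimal separators."""
    return LOCALE_RULES[locale]["number"].fullmatch(value) is not None


def calibrate_expansion(known_good: list[tuple[str, str]], headroom: float = 1.1) -> float:
    """Baseline testing: derive an expansion limit from already-approved translations."""
    ratios = [len(target) / len(source) for source, target in known_good if source]
    return max(ratios) * headroom
```

With these rules, "1.234,56" passes for `de-DE` instead of being flagged as a malformed number, which is exactly the kind of false positive described above.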

Challenge 2: Test maintenance overhead

The problem: As your product evolves, automated tests become outdated and require constant updates, sometimes taking more time than manual testing.

Root causes:

- UI changes breaking automated navigation scripts

- New features requiring updated test scenarios

- Content structure changes invalidating existing checks

- Multi-platform testing requiring separate maintenance for each environment

Solutions:

- Design for testability: Work with development teams to implement stable element IDs and consistent naming conventions that survive UI updates.

- Modular test architecture: Build tests in small, reusable components rather than monolithic scripts. When one element changes, you only update one module.

- Version-controlled test data: Maintain test scenarios alongside your codebase so they evolve together.

- Automated test validation: Create “tests for your tests” that verify your LQA automation is still working correctly after product updates.
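The modular approach and the "tests for your tests" idea combine well: each check is a small, independent function, and each one carries a known-bad and a known-good sample that proves it still behaves correctly. The check names and samples in this Python sketch are hypothetical.

```python
from typing import Callable

# A check takes (source, target) and returns a list of problem descriptions.
Check = Callable[[str, str], list[str]]


def no_double_spaces(source: str, target: str) -> list[str]:
    return ["double space"] if "  " in target else []


def no_stray_whitespace(source: str, target: str) -> list[str]:
    # Flag leading/trailing whitespace only when the source has none.
    if target != target.strip() and source == source.strip():
        return ["stray whitespace"]
    return []


CHECKS: list[Check] = [no_double_spaces, no_stray_whitespace]


def run_suite(checks: list[Check], source: str, target: str) -> list[str]:
    problems: list[str] = []
    for check in checks:
        problems.extend(check(source, target))
    return problems


# "Tests for your tests": each check must fire on its bad sample and stay quiet on its good one.
SELF_TESTS: dict[Check, tuple[tuple[str, str], tuple[str, str]]] = {
    no_double_spaces: (("Buy now", "Jetzt  kaufen"), ("Buy now", "Jetzt kaufen")),
    no_stray_whitespace: (("Buy now", "Jetzt kaufen "), ("Buy now", "Jetzt kaufen")),
}


def validate_checks() -> list[str]:
    """Return the names of checks that no longer behave as expected."""
    return [
        check.__name__
        for check, (bad, good) in SELF_TESTS.items()
        if not check(*bad) or check(*good)
    ]
```

Running `validate_checks()` after each product update gives an early signal when a rule has silently stopped working, and a change to one rule touches only its own function and samples.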

Challenge 3: Scaling across languages and markets

The problem: What works for 5 languages becomes unmanageable for 25+ languages, especially when dealing with diverse writing systems and cultural contexts.

Scaling pain points:

- Processing time grows with every language you add, since each check has to run once per locale

- Different languages require different types of validation

- Managing test results across dozens of locales becomes overwhelming

- Resource allocation becomes complex when some languages need more intensive testing

Solutions:

- Tiered testing approach: Implement different automation levels based on market importance and language complexity. Tier 1 markets get comprehensive automation, while Tier 3 markets use lighter, rule-based checks.

- Language family groupings: Create test templates for language families (Romance, Germanic, etc.) rather than building from scratch for each language.

- Parallel processing architecture: Invest in infrastructure that can run multiple language tests simultaneously rather than sequentially.

- Smart prioritization: Use analytics data to focus automated testing on the most user-impacted content areas for each market.
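Tiering and parallel processing fit together naturally: the tier decides which checks run for a locale, and a worker pool runs locales side by side instead of one after another. In this Python sketch, the tier assignments and check names are placeholders, and `run_checks` only reports which checks it would run rather than executing them.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical tier assignments and check names, for illustration only.
TIERS = {"de": 1, "ja": 1, "fr": 2, "pt-BR": 2, "fi": 3}
CHECKS_BY_TIER = {
    1: ["untranslated", "placeholders", "length", "screenshots"],
    2: ["untranslated", "placeholders", "length"],
    3: ["untranslated", "placeholders"],
}
DEFAULT_TIER = 3


def run_checks(locale: str) -> tuple[str, list[str]]:
    checks = CHECKS_BY_TIER[TIERS.get(locale, DEFAULT_TIER)]
    # A real pipeline would execute each check here; this sketch only reports the plan.
    return locale, list(checks)


def run_all(locales: list[str], workers: int = 4) -> dict[str, list[str]]:
    # pool.map runs locales concurrently but yields results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(run_checks, locales))
```

Threads suit checks that mostly wait on browsers or APIs; for CPU-heavy checks, `ProcessPoolExecutor` from the same module is the usual swap. Unlisted locales fall back to the lightest tier.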

Challenge 4: Integration complexity

The problem: Automated LQA tools often don’t integrate smoothly with existing localization workflows, creating data silos and manual handoffs.

Integration bottlenecks:

- Translation management systems that don’t communicate with QA tools

- Inconsistent data formats between different tools in the localization stack

- Manual export/import processes that defeat automation benefits

- Separate reporting systems that don’t provide unified quality metrics

Solutions:

- API-first tool selection: Choose tools with robust APIs that can communicate with each other programmatically.

- Single source of truth: Establish one platform as your content hub and ensure all other tools sync with it bidirectionally.

- Unified reporting dashboard: Create a centralized view that aggregates quality metrics from all your automated tools.

- Webhook automation: Set up automated triggers so that translation completion automatically initiates QA testing, and QA completion triggers review assignments.
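Webhook automation usually boils down to a small handler that reads an incoming event and kicks off the next step. In this Python sketch, the event names and payload fields (`translation.completed`, `qa.completed`, `project`, `locale`) are hypothetical, not any specific tool’s webhook schema, and `start_qa` and `assign_review` stand in for whatever your QA and review systems actually expose.

```python
import json
from typing import Callable

Action = Callable[[str, str], None]  # (project, locale) -> None


def handle_webhook(body: str, start_qa: Action, assign_review: Action) -> str:
    """Route a hypothetical workflow event to the next step and report what happened."""
    event = json.loads(body)
    kind = event.get("event")
    if kind == "translation.completed":
        start_qa(event["project"], event["locale"])
        return "qa_started"
    if kind == "qa.completed":
        assign_review(event["project"], event["locale"])
        return "review_assigned"
    # Unknown events are acknowledged but do nothing.
    return "ignored"
```

Passing the actions in as parameters keeps the routing logic testable without a running QA system or web server.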

Choosing the right platform for automated LQA

The success of your automated localization QA depends heavily on selecting a localization QA tool that can grow with your needs and integrate seamlessly with your existing software.

Key platform requirements

- Quality check coverage: Look for platforms that automate technical validation (file formats, character encoding, placeholder verification), content quality assessment (terminology consistency, cultural appropriateness), and functional testing (cross-browser compatibility, form verification, payment gateway testing).

- Integration capabilities: Your platform should connect with your existing translation management system through bidirectional data sync and provide real-time status updates. Essential integrations include version control systems (Git), CI/CD pipelines, and project management tools (Jira, Azure DevOps).

- Scalability features: As you expand to more languages, prioritize platforms with parallel processing capabilities, language-specific rule sets, and smart resource allocation that uses machine learning to prioritize checks based on content importance.

- User experience: The best platforms present quality issues alongside their context (original strings, translations, and screenshots) in a single interface. Look for built-in communication tools and customizable quality metrics that match your organization’s priorities.

- Analytics and reporting: Data-driven localization requires historical quality tracking, predictive analytics to identify high-risk content areas, and ROI measurement tools that demonstrate automation value.
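Placeholder verification, one of the technical checks listed above, shows the kind of validation these platforms automate. This Python sketch assumes placeholders in `{name}`, `{0}`, `%s`, `%d`, or positional `%1$s` style; your formats may differ. It compares counts rather than positions, because translations often reorder placeholders.

```python
import re
from collections import Counter

# Assumed placeholder styles: {name}, {0}, %s, %d, and positional %1$s / %2$d.
PLACEHOLDER = re.compile(r"\{\w+\}|%(?:\d+\$)?[sd]")


def placeholder_mismatch(source: str, target: str) -> dict[str, int]:
    """Positive count = placeholder missing from target; negative = extra in target."""
    diff = Counter(PLACEHOLDER.findall(source))
    diff.subtract(Counter(PLACEHOLDER.findall(target)))
    # Counts, not positions, are compared because translations often reorder placeholders.
    return {ph: n for ph, n in diff.items() if n != 0}
```

An empty result means every placeholder survived translation; a renamed placeholder such as `{user}` becoming `{utilisateur}` shows up as one missing and one extra entry.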

How Gridly delivers on these requirements

As a content management platform built specifically with localization in mind, Gridly addresses these critical requirements while offering unique advantages:

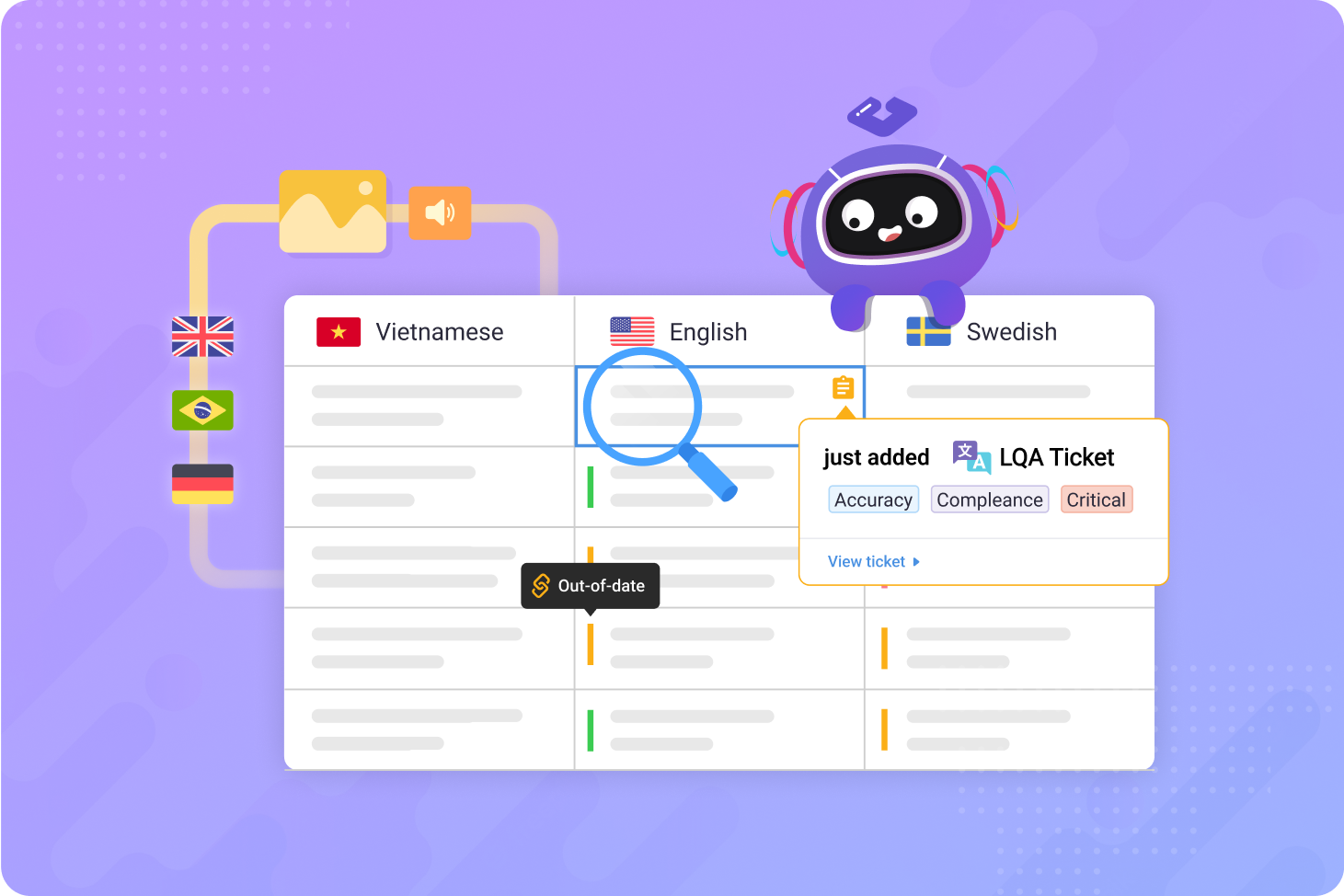

Comprehensive quality management with Auto QA: Gridly’s Auto QA feature allows you to set up automated rules against which translations are checked, covering everything from spelling and grammar to terminology consistency and formatting issues.

Advanced error detection with lexiQA: The lexiQA add-on helps detect localization errors automatically, reducing time spent on manual QA checks and labor costs. This integration runs automated QA checks to detect spelling, symbols, numerals, or punctuation issues, displaying errors and inconsistencies directly in your target languages with highlighting and fix suggestions.

Visual context integration in Grids: Gridly’s Grids provide a spreadsheet-like interface for managing content where you can import screenshots directly next to relevant strings. This structured content approach ensures every record contains precisely the information needed in a ready-to-use form, with unique IDs and consistent structure across thousands of content objects.

In-depth QA reporting: Mark localization QA issues using the ticket system, then export them to create detailed reports. Filter LQA issues in the Ticket center and export tickets for comprehensive quality analysis.

Scalable architecture: Whether managing 3 languages or 30, Gridly’s infrastructure scales seamlessly. Push content from your Grids to the TMS module, where it’s broken down into tasks for translators to work on in the CAT editor.

Integration ecosystem: Gridly connects with translation memories, CAT tools like Phrase TMS and memoQ, project management systems, and development environments including Unity and Unreal Engine, creating a unified localization ecosystem rather than another isolated tool.

Ready to transform your multilingual content management? Schedule a demo today to see how Gridly can work for your specific needs or sign up for a 14-day free trial to experience it yourself.